2020/03 作者:ihunter 0 次 0

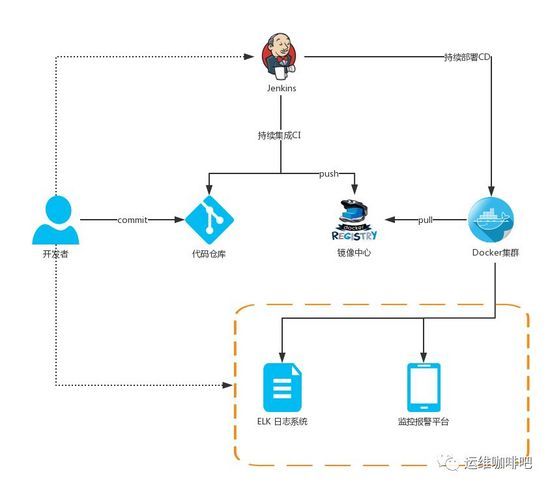

一、Prometheus 安装及配置

1、下载及解压安装包

cd /usr/local/src/

export VER="2.13.1"

wget https://github.com/prometheus/prometheus/releases/download/v${VER}/prometheus-${VER}.linux-amd64.tar.gz

mkdir -p /data0/prometheus

groupadd prometheus

useradd -g prometheus prometheus -d /data0/prometheus

tar -xvf prometheus-${VER}.linux-amd64.tar.gz

cd /usr/local/src/

mv prometheus-${VER}.linux-amd64 /data0/prometheus/prometheus_server

cd /data0/prometheus/prometheus_server/

mkdir -p {data,config,logs,bin}

mv prometheus promtool bin/

mv prometheus.yml config/

chown -R prometheus.prometheus /data0/prometheus2 、设置环境变量

vim /etc/profile PATH=/data0/prometheus/prometheus_server/bin:$PATH:$HOME/bin source /etc/profile

3、检查配置文件

promtool check config /data0/prometheus/prometheus_server/config/prometheus.yml Checking /data0/prometheus/prometheus_server/config/prometheus.yml SUCCESS: 0 rule files found

4、创建 prometheus.service 的 systemd unit 文件

4.1、常规服务

sudo tee /etc/systemd/system/prometheus.service <<-'EOF' [Unit] Description=Prometheus Documentation=https://prometheus.io/ After=network.target [Service] Type=simple User=prometheus ExecStart=/data0/prometheus/prometheus_server/bin/prometheus --config.file=/data0/prometheus/prometheus_server/config/prometheus.yml --storage.tsdb.path=/data0/prometheus/prometheus_server/data --storage.tsdb.retention=60d Restart=on-failure [Install] WantedBy=multi-user.target EOF systemctl enable prometheus.service systemctl stop prometheus.service systemctl restart prometheus.service systemctl status prometheus.service

4.2、使用 supervisor 管理 prometheus_server

yum install -y epel-release supervisor sudo tee /etc/supervisord.d/prometheus.ini<<-"EOF" [program:prometheus] # 启动程序的命令; command = /data0/prometheus/prometheus_server/bin/prometheus --config.file=/data0/prometheus/prometheus_server/config/prometheus.yml --storage.tsdb.path=/data0/prometheus/prometheus_server/data --storage.tsdb.retention=60d # 在supervisord启动的时候也自动启动; autostart = true # 程序异常退出后自动重启; autorestart = true # 启动5秒后没有异常退出,就当作已经正常启动了; startsecs = 5 # 启动失败自动重试次数,默认是3; startretries = 3 # 启动程序的用户; user = prometheus # 把stderr重定向到stdout,默认false; redirect_stderr = true # 标准日志输出; stdout_logfile=/data0/prometheus/prometheus_server/logs/out-prometheus.log # 错误日志输出; stderr_logfile=/data0/prometheus/prometheus_server/logs/err-prometheus.log # 标准日志文件大小,默认50MB; stdout_logfile_maxbytes = 20MB # 标准日志文件备份数; stdout_logfile_backups = 20 EOF systemctl daemon-reload systemctl enable supervisord systemctl stop supervisord systemctl restart supervisord supervisorctl restart prometheus supervisorctl status

5、prometheus.yml 配置文件

#创建Alertmanager告警规则文件 mkdir -p /data0/prometheus/prometheus_server/rules/ touch /data0/prometheus/prometheus_server/rules/node_down.yml touch /data0/prometheus/prometheus_server/rules/memory_over.yml touch /data0/prometheus/prometheus_server/rules/disk_over.yml touch /data0/prometheus/prometheus_server/rules/cpu_over.yml #prometheus配置文件 cat > /data0/prometheus/prometheus_server/config/prometheus.yml << \EOF # my global config global: scrape_interval: 15s # 设置抓取(pull)时间间隔,默认是1m evaluation_interval: 15s # 设置rules评估时间间隔,默认是1m # scrape_timeout is set to the global default (10s). # 告警管理配置,默认配置 alerting: alertmanagers: - static_configs: - targets: - 192.168.56.11:9093 # 这里修改为 alertmanagers 的地址 # 加载rules,并根据设置的时间间隔定期评估 rule_files: # - "first_rules.yml" # - "second_rules.yml" - "/data0/prometheus/prometheus_server/rules/node_down.yml" # 实例存活报警规则文件 - "/data0/prometheus/prometheus_server/rules/memory_over.yml" # 内存报警规则文件 - "/data0/prometheus/prometheus_server/rules/disk_over.yml" # 磁盘报警规则文件 - "/data0/prometheus/prometheus_server/rules/cpu_over.yml" # cpu报警规则文件 # 抓取(pull),即监控目标配置 # 默认只有主机本身的监控配置 scrape_configs: # The job name is added as a label `job=` to any timeseries scraped from this config. - job_name: 'prometheus' # metrics_path defaults to '/metrics' # scheme defaults to 'http'. # 可覆盖全局配置设置的抓取间隔,由15秒重写成5秒。 scrape_interval: 10s static_configs: - targets: ['localhost:9090', 'localhost:9100'] - job_name: 'DMC_HOST' file_sd_configs: - files: ['./hosts.json'] # 被监控的主机,可以通过static_configs罗列所有机器,这里通过file_sd_configs参数加载文件的形式读取 # 被监控的主机,可以json或yaml格式书写,我这里以json格式书写,target里面写监控机器的ip,labels非必须,可以由你自己定 EOF #file_sd_configs参数形式配置主机列表 cat > /data0/prometheus/prometheus_server/config/hosts.json << \EOF [ { "targets": [ "192.168.56.11:9100", "192.168.56.12:9100", "192.168.56.13:9100" ], "labels": { "service": "db_node" } }, { "targets": [ "192.168.56.14:9100", "192.168.56.15:9100", "192.168.56.16:9100" ], "labels": { "service": "web_node" } } ] EOF # 服务器存活报警 cat > /data0/prometheus/prometheus_server/rules/node_down.yml <<\EOF groups: - name: 实例存活告警规则 rules: - alert: 实例存活告警 expr: up == 0 for: 1m labels: user: prometheus severity: warning annotations: description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minutes." EOF # mem报警 cat > /data0/prometheus/prometheus_server/rules/memory_over.yml <<\EOF groups: - name: 内存报警规则 rules: - alert: 内存使用率告警 expr: (node_memory_MemTotal_bytes - (node_memory_MemFree_bytes+node_memory_Buffers_bytes+node_memory_Cached_bytes )) / node_memory_MemTotal_bytes * 100 > 80 for: 1m labels: user: prometheus severity: warning annotations: description: "服务器: 内存使用超过80%!(当前值: {{ $value }}%)" EOF # disk报警 cat > /data0/prometheus/prometheus_server/rules/disk_over.yml <<\EOF groups: - name: 磁盘报警规则 rules: - alert: 磁盘使用率告警 expr: (node_filesystem_size_bytes - node_filesystem_avail_bytes) / node_filesystem_size_bytes * 100 > 80 for: 1m labels: user: prometheus severity: warning annotations: description: "服务器: 磁盘设备: 使用超过80%!(挂载点: {{ $labels.mountpoint }} 当前值: {{ $value }}%)" EOF # cpu报警 cat > /data0/prometheus/prometheus_server/rules/cpu_over.yml <<\EOF groups: - name: CPU报警规则 rules: - alert: CPU使用率告警 expr: 100 - (avg by (instance)(irate(node_cpu_seconds_total{mode="idle"}[1m]) )) * 100 > 90 for: 1m labels: user: prometheus severity: warning annotations: description: "服务器: CPU使用超过90%!(当前值: {{ $value }}%)" EOF

6、查看 UI

Prometheus 自带有简单的 UI

http://192.168.56.11:9090/

http://192.168.56.11:9090/targets

二、node_exporter 安装及配置

1、下载及解压安装包

cd /usr/local/src/

export VER="0.18.1"

wget https://github.com/prometheus/node_exporter/releases/download/v${VER}/node_exporter-${VER}.linux-amd64.tar.gz

mkdir -p /data0/prometheus

groupadd prometheus

useradd -g prometheus prometheus -d /data0/prometheus

tar -xvf node_exporter-${VER}.linux-amd64.tar.gz

cd /usr/local/src/

mv node_exporter-${VER}.linux-amd64 /data0/prometheus/node_exporter

chown -R prometheus.prometheus /data0/prometheus2、创建 node_exporter.service的 systemd unit 文件

centos 下创建服务

cat > /usr/lib/systemd/system/node_exporter.service <ubuntu 下创建服务

cat > /etc/systemd/system/node_exporter.service <3、启动服务

systemctl daemon-reload systemctl stop node_exporter.service systemctl enable node_exporter.service systemctl restart node_exporter.service4、运行状态

systemctl status node_exporter.service

5、客户监控端数据汇报访问:http://192.168.56.11:9100/metrics 查看从 exporter 具体能抓到的数据。

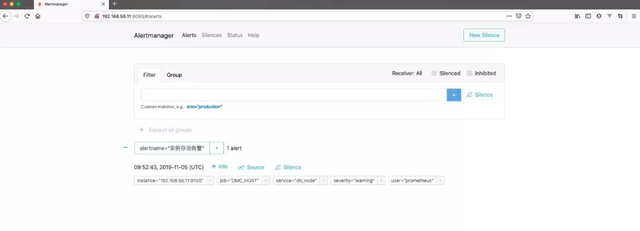

三、部署 Alertmanager 钉钉报警

1、下载及解压安装包

cd /usr/local/src/ export VER="0.19.0" wget https://github.com/prometheus/alertmanager/releases/download/v${VER}/alertmanager-${VER}.linux-amd64.tar.gz mkdir -p /data0/prometheus groupadd prometheus useradd -g prometheus prometheus -d /data0/prometheus tar -xvf alertmanager-${VER}.linux-amd64.tar.gz cd /usr/local/src/ mv alertmanager-${VER}.linux-amd64 /data0/prometheus/alertmanager chown -R prometheus.prometheus /data0/prometheus2、配置 Alertmanager

alertmanager 的 webhook 集成了钉钉报警,钉钉机器人对文件格式有严格要求,所以必须通过特定的格式转换,才能发送给你钉钉的机器人。有人已经t贴心的为大家写了转换插件,那我们也就直接拿来用吧!

( https://github.com/timonwong/prometheus-webhook-dingtalk.git )

cat >/data0/prometheus/alertmanager/alertmanager.yml<<-"EOF" # 全局配置项 global: resolve_timeout: 5m # 处理超时时间,默认为5min # 定义路由树信息 route: group_by: [alertname] # 报警分组依据 receiver: ops_notify # 设置默认接收人 group_wait: 30s # 最初即第一次等待多久时间发送一组警报的通知 group_interval: 60s # 在发送新警报前的等待时间 repeat_interval: 1h # 重复发送告警时间。默认1h routes: - receiver: ops_notify # 基础告警通知 group_wait: 10s match_re: alertname: 实例存活告警|磁盘使用率告警 # 匹配告警规则中的名称发送 - receiver: info_notify # 消息告警通知 group_wait: 10s match_re: alertname: 内存使用率告警|CPU使用率告警 # 定义基础告警接收者 receivers: - name: ops_notify webhook_configs: - url: http://localhost:8060/dingtalk/ops_dingding/send send_resolved: true # 警报被解决之后是否通知 # 定义消息告警接收者 - name: info_notify webhook_configs: - url: http://localhost:8060/dingtalk/info_dingding/send send_resolved: true # 一个inhibition规则是在与另一组匹配器匹配的警报存在的条件下,使匹配一组匹配器的警报失效的规则。两个警报必须具有一组相同的标签。 inhibit_rules: - source_match: severity: 'critical' target_match: severity: 'warning' equal: ['alertname', 'dev', 'instance'] EOF3、启动 Alertmanager

cat >/lib/systemd/system/alertmanager.service<<\EOF [Unit] Description=Prometheus: the alerting system Documentation=http://prometheus.io/docs/ After=prometheus.service [Service] ExecStart=/data0/prometheus/alertmanager/alertmanager --config.file=/data0/prometheus/alertmanager/alertmanager.yml Restart=always StartLimitInterval=0 RestartSec=10 [Install] WantedBy=multi-user.target EOF systemctl enable alertmanager.service systemctl stop alertmanager.service systemctl restart alertmanager.service systemctl status alertmanager.service #查看端口 netstat -anpt | grep 90934、将钉钉接入 Prometheus AlertManager WebHook

#命令行测试机器人发送消息,验证是否可以发送成功,有的时候prometheus-webhook-dingtalk会报422的错误,就是因为钉钉的安全限制(这里的安全策略是发送消息,必须包含prometheus才可以正常发送) curl -H "Content-Type: application/json" -d '{"msgtype":"text","text":{"content":"prometheus alert test"}}' https://oapi.dingtalk.com/robot/send?access_token=18f977769d50518e9d4f99a0d5dc1376f05615b61ea3639a87f106459f75b5c9 curl -H "Content-Type: application/json" -d '{"msgtype":"text","text":{"content":"prometheus alert test"}}' https://oapi.dingtalk.com/robot/send?access_token=11a0496d0af689d56a5861ae34dc47d9f1607aee6f342747442cc83e367152234.1、二进制包方式部署插件

cd /usr/local/src/ export VER="0.3.0" wget https://github.com/timonwong/prometheus-webhook-dingtalk/releases/download/v${VER}/prometheus-webhook-dingtalk-${VER}.linux-amd64.tar.gz tar -zxvf prometheus-webhook-dingtalk-${VER}.linux-amd64.tar.gz mv prometheus-webhook-dingtalk-${VER}.linux-amd64 /data0/prometheus/alertmanager/prometheus-webhook-dingtalk #使用方法:prometheus-webhook-dingtalk --ding.profile=钉钉接收群组的值=webhook的值 cat > /etc/systemd/system/prometheus-webhook-dingtalk.service<<\EOF [Unit] Description=prometheus-webhook-dingtalk After=network-online.target [Service] Restart=on-failure ExecStart=/data0/prometheus/alertmanager/prometheus-webhook-dingtalk/prometheus-webhook-dingtalk \ --ding.profile=ops_dingding=https://oapi.dingtalk.com/robot/send?access_token=18f977769d50518e9d4f99a0d5dc1376f05615b61ea3639a87f106459f75b5c9 \ --ding.profile=info_dingding=https://oapi.dingtalk.com/robot/send?access_token=11a0496d0af689d56a5861ae34dc47d9f1607aee6f342747442cc83e36715223 [Install] WantedBy=multi-user.target EOF systemctl daemon-reload systemctl stop prometheus-webhook-dingtalk systemctl restart prometheus-webhook-dingtalk systemctl status prometheus-webhook-dingtalk netstat -nltup|grep 80604.2、docker 方式部署插件

docker pull timonwong/prometheus-webhook-dingtalk:v0.3.0 #docker run -d --restart always -p 8060:8060 timonwong/prometheus-webhook-dingtalk:v0.3.0 --ding.profile="= " docker run -d --restart always -p 8060:8060 timonwong/prometheus-webhook-dingtalk:v0.3.0 --ding.profile="ops_dingding=https://oapi.dingtalk.com/robot/send?access_token=18f977769d50518e9d4f99a0d5dc1376f05615b61ea3639a87f106459f75b5c9" --ding.profile="info_dingding=https://oapi.dingtalk.com/robot/send?access_token=11a0496d0af689d56a5861ae34dc47d9f1607aee6f342747442cc83e36715223" 这里解释一下两个变量: :prometheus-webhook-dingtalk 支持多个钉钉 webhook,不同 webhook 就是靠名字对应到 URL 来做映射的。要支持多个钉钉 webhook,可以用多个 --ding.profile 参数的方式支持,例如:sudo docker run -d --restart always -p 8060:8060 timonwong/prometheus-webhook-dingtalk:v0.3.0 --ding.profile="webhook1=https://oapi.dingtalk.com/robot/send?access_token=token1" --ding.profile="webhook2=https://oapi.dingtalk.com/robot/send?access_token=token2"。而名字和 URL 的对应规则如下,ding.profile="webhook1=......",对应的 API URL 为:http://localhost:8060/dingtalk/webhook1/send :这个就是之前获取的钉钉 webhook 4.3、源码方式部署插件

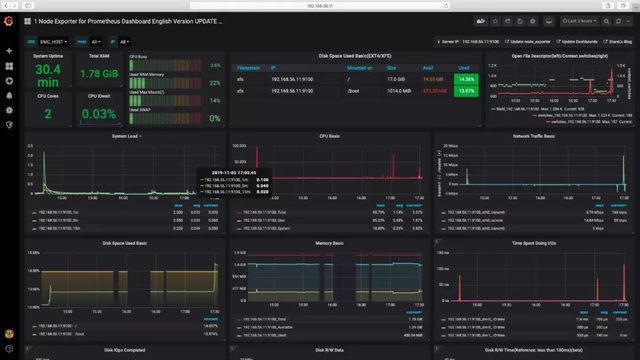

#安装golang环境 cd /usr/local/src/ wget https://dl.google.com/go/go1.13.4.linux-amd64.tar.gz tar -zxvf go1.13.4.linux-amd64.tar.gz mv go/ /usr/local/ #vim /etc/profile export GOROOT=/usr/local/go export PATH=$PATH:$GOROOT/bin #添加环境变量GOPATH mkdir -p /opt/path export GOPATH=/opt/path #若 $GOPATH/bin 没有加入$PATH中,你需要执行将其可执行文件移动到$GOBIN下 export GOPATH=/opt/path export PATH=$PATH:$GOROOT/bin:$GOPATH/bin source /etc/profile #下载插件 cd /usr/local/src/ git clone https://github.com/timonwong/prometheus-webhook-dingtalk.git cd prometheus-webhook-dingtalk go get github.com/timonwong/prometheus-webhook-dingtalk/cmd/prometheus-webhook-dingtalk make #(make成功后,会产生一个prometheus-webhook-dingtalk二进制文件) #将钉钉告警插件拷贝到alertmanager目录 cp prometheus-webhook-dingtalk /data0/prometheus/alertmanager/ #启动服务 nohup /data0/prometheus/alertmanager/prometheus-webhook-dingtalk/prometheus-webhook-dingtalk --ding.profile="ops_dingding=https://oapi.dingtalk.com/robot/send?access_token=18f977769d50518e9d4f99a0d5dc1376f05615b61ea3639a87f106459f75b5c9" --ding.profile="info_dingding=https://oapi.dingtalk.com/robot/send?access_token=11a0496d0af689d56a5861ae34dc47d9f1607aee6f342747442cc83e36715223" 2>&1 1>/tmp/dingding.log & #检查端口 netstat -anpt | grep 8060四、Grafana 安装及配置

1、下载及安装

cd /usr/local/src/ export VER="6.4.3" wget https://dl.grafana.com/oss/release/grafana-${VER}-1.x86_64.rpm yum localinstall -y grafana-${VER}-1.x86_64.rpm

2、启动服务systemctl daemon-reload systemctl enable grafana-server.service systemctl stop grafana-server.service systemctl restart grafana-server.service

3、访问 WEB 界面默认账号/密码:admin/admin http://192.168.56.11:3000

4、Grafana 添加数据

在登陆首页,点击"Configuration-Data Sources"按钮,跳转到添加数据源页面,配置如下: Name: prometheus Type: prometheus URL: http://192.168.56.11:9090 Access: Server 取消Default的勾选,其余默认,点击"Add",如下: 需要安装饼图的插件 grafana-cli plugins install grafana-piechart-panel systemctl restart grafana-server.service 请确保安装后能正常添加饼图。 安装consul数据源插件 grafana-cli plugins install sbueringer-consul-datasource systemctl restart grafana-server.service五、替换 grafana 的 dashboards